Microsoft Sentinel Detection as Code

Introduction

Microsoft Sentinel detection engineering has long been a manual, portal-centric process that creates operational challenges for security teams. This detection-as-code framework turns Sentinel operations into a repeatable, testable, and auditable software engineering discipline. By applying Terraform’s infrastructure-as-code (IaC) principles, teams can manage detection rules, automation workflows, hunting queries, watchlists, and playbooks through version-controlled code, with peer review and continuous integration and continuous delivery (CI/CD) pipelines.

The framework addresses the gap between security engineering best practices and the realities of operating a modern SIEM at scale. Whether you’re a security engineer building detection logic, a platform engineer ensuring reliable deployments, an incident responder needing consistent automation, or a threat hunter developing new queries, this approach provides the foundation for collaborative, maintainable security operations.

This framework was heavily inspired by a talk from Splunk .conf23, specifically SEC1847A. I’m unable to find the video for it but this links to slides. I was amazed by the possibility of writing a single file that resulted in a validated alert being pushed to Splunk, along with automated documentation. Using a Version Control System (VCS) also encourages, I believe, a culture of peer review and upskilling, since colleagues have to review your detections. Reviews either reinforce efficient queries or, in theory, give someone an opportunity to learn and improve.

TLDR: If you just want the code to get started it can be found here: GitHub Repository

Problem Statement

Traditional Sentinel management through the Azure portal creates several critical operational challenges that compound as teams and detection portfolios grow:

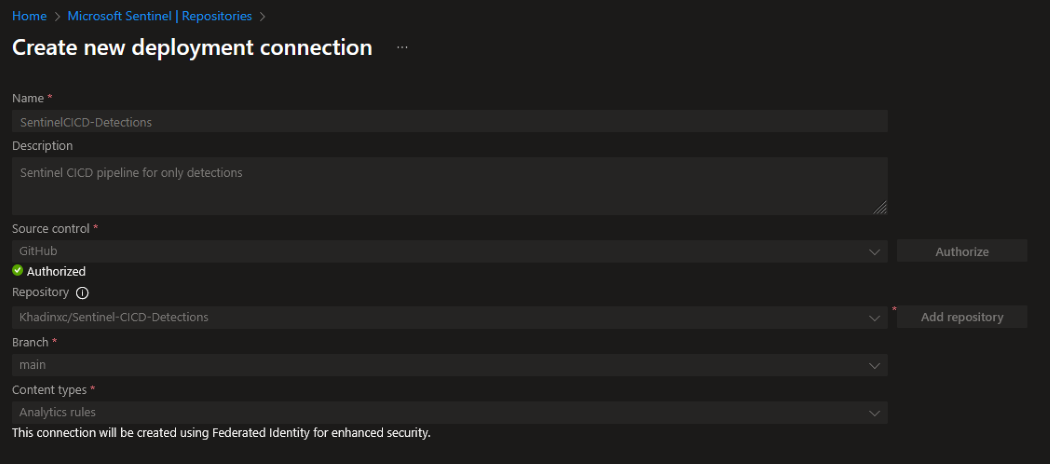

State Management Crisis: The out-of-the-box experience in Microsoft Sentinel includes “Repositories”, which automates some of the integration with GitHub or Azure DevOps by generating an Application Registration for the VCS and adding a pipeline action that triggers on every commit. The primary issue I discovered was the complete lack of state tracking. In an enterprise setup with potentially a hundred different pieces of content in Sentinel, this pipeline redeploys all detections on every commit. As you can imagine, this leads to drawn-out deployment times and a potential loss of rule metadata, because rules are destroyed and recreated every time a new rule is deployed. A related problem is the lack of resource tracking: if a rule is deployed manually in Azure, you cannot export it without scripting against the Azure API.

Deploying Microsoft Sentinel Repositories
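To make the state-tracking point concrete, here is a minimal sketch of the idea in Python. It is not how Terraform or Sentinel Repositories work internally; it only illustrates why recording a fingerprint of each deployed rule lets a pipeline touch only what changed. The function names and the shape of the state dictionary are assumptions made for this example.

```python
import hashlib
import json


def fingerprint(rule: dict) -> str:
    """Stable SHA-256 of a rule definition (key order does not matter)."""
    canonical = json.dumps(rule, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def plan_changes(desired: dict, recorded: dict) -> dict:
    """Compare desired rules against fingerprints saved at the last deploy.

    desired:  rule name -> rule definition (what the repository says)
    recorded: rule name -> fingerprint stored after the previous deployment
    """
    plan = {"create": [], "update": [], "delete": [], "unchanged": []}
    for name, rule in sorted(desired.items()):
        if name not in recorded:
            plan["create"].append(name)
        elif recorded[name] != fingerprint(rule):
            plan["update"].append(name)
        else:
            plan["unchanged"].append(name)
    # Anything recorded but no longer in the repository should be removed.
    plan["delete"] = sorted(set(recorded) - set(desired))
    return plan
```

With a hundred rules and a one-line change, a plan like this contains a single entry under "update" and leaves everything else alone, which is the behaviour the stateless pipeline lacks.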


Version Control Vacuum: The portal provides no meaningful change tracking. Understanding who changed which rule, when they changed it, and why requires parsing through activity logs that provide limited context. Rolling back to previous configurations or understanding the evolution of detection logic becomes nearly impossible. Change tracking around alerts has been a recurring pain point when audits come around. The framework addresses this by restricting modifications to a single source of truth.

Documentation Decay: Detection context, ownership, rationale, and operational notes live in disparate systems or not at all. Rules deployed months ago become orphaned artifacts with unclear purpose or maintenance responsibility. Knowledge transfer becomes tribal, and detection effectiveness degrades over time due to lack of context.

Collaboration Friction: Multiple team members cannot safely work on detection rules simultaneously. There’s no peer review process for changes, no testing pipeline for validation, and no systematic way to share knowledge or maintain coding standards across the team.

This framework replaces these manual processes with software engineering practices proven in application development: infrastructure as code through Terraform, version control through Git, automated testing and validation, peer review through pull requests, and automatic documentation generation that keeps pace with code changes.

Requirements

I put together some requirements to adhere to throughout the development process of this framework to meet practical constraints and operational realities of enterprise security teams:

Scalability: Support for hundreds of detection rules across multiple workspaces without performance degradation. The modular architecture allows teams to start small and grow incrementally without architectural debt.

Modularity: Components can be enabled or disabled independently based on team needs and organisational constraints. Teams can adopt analytics rules without automation, or implement watchlists without playbooks, allowing for phased implementation approaches.

Repeatability: Terraform plans should be predictable and applies should converge to desired state regardless of current configuration. This enables safe rollbacks, confident promotions, and reliable disaster recovery scenarios.

Environment Awareness: Support for development, testing, and production environments with appropriate isolation, promotion workflows, and environment-specific configurations. Changes flow through environments with proper gates and validation.

Auditability: Complete change history through Git commits, pull request reviews, and Terraform state logs. Every change is traceable to an individual, timestamp, and business justification. This makes your auditors’ lives easier and streamlines reporting.

Low-friction Authoring: Security engineers should focus on detection logic, not infrastructure boilerplate. YAML-first authoring with strong validation provides immediate feedback without requiring deep Terraform knowledge.

Operational Safety: Built-in validation, testing hooks, and approval workflows prevent dangerous changes from reaching production. Drift detection identifies when manual changes violate the desired state.

Extensibility: New artifact types, providers, or integration points can be added without breaking existing functionality. The framework should grow with evolving security needs and Azure platform capabilities.

Technology Stack

The framework leverages a carefully selected technology stack designed for reliability, maintainability, and security team workflows:

Infrastructure as Code Foundation:

- Terraform serves as the primary orchestration engine, providing idempotent deployments and state management

- AzureRM Provider handles native Sentinel resources including analytics rules, automation rules, watchlists, and data connectors

- AzAPI Provider fills gaps where the AzureRM provider lags, particularly for custom table creation and newer Azure features

Content Management:

- YAML-first authoring for detection rules, automation workflows, and hunting queries, providing human-readable definitions with strong schema validation. Sigma, a widely recognised detection rule standard, is also YAML-based.

- CSV-based watchlists with accompanying metadata for threat intelligence and operational reference data.

- Structured metadata capturing tactics, techniques, severity levels, ownership, and operational context.

State and Deployment:

- HCP Terraform (formerly Terraform Cloud) for remote state management, policy enforcement, and approval workflows

- GitHub Actions or Azure DevOps for CI/CD pipelines providing validation, planning, and automated deployment

- Environment-specific configurations through Terraform variables and workspace management

Integration and Automation:

- PowerShell scripting for content validation, format conversion, and operational utilities. The Azure CLI (az) handles much of the authentication needed to interact with the Azure API, and PowerShell (surprise, surprise) has many Microsoft-supported modules and generally connects well to Azure.

- Logic Apps and Azure Automation for playbook orchestration and incident response workflows

- REST API integration for bridging gaps between Terraform and Azure services

Documentation and Observability:

- Automated Detection Wiki generation from content metadata and deployment state

- Terraform state tracking for drift detection and compliance reporting

- Structured logging throughout the deployment pipeline for operational visibility
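Drift detection boils down to comparing what the code declares with what is actually deployed. Terraform does this for you during a plan; the sketch below only illustrates the comparison on plain dictionaries. The function name, the ignored fields, and the input shapes are assumptions for this example.

```python
def detect_drift(desired: dict, actual: dict, ignore=("etag", "last_modified")) -> dict:
    """Return {rule_name: {field: (desired_value, actual_value)}} for mismatches.

    desired: rule name -> properties declared in the repository
    actual:  rule name -> properties read back from the workspace
    ignore:  server-managed fields that should never count as drift
    """
    drift = {}
    for name, want in desired.items():
        have = actual.get(name)
        if have is None:
            # Declared in code but not deployed (for example, deleted by hand).
            drift[name] = {"(resource)": ("present", "missing")}
            continue
        fields = (set(want) | set(have)) - set(ignore)
        diffs = {
            field: (want.get(field), have.get(field))
            for field in sorted(fields)
            if want.get(field) != have.get(field)
        }
        if diffs:
            drift[name] = diffs
    for name in actual:
        if name not in desired:
            # Deployed but unknown to the repository (created in the portal).
            drift[name] = {"(resource)": ("absent", "present")}
    return drift
```

In a real pipeline the same signal is available from `terraform plan -detailed-exitcode`, which exits with code 2 when the plan contains changes, so a scheduled job can alert on drift without any custom comparison code.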

Ways of Working

The framework establishes clear workflows and conventions that enable teams to collaborate effectively on detection engineering. These patterns have evolved from practical experience managing hundreds of detection rules across multiple environments.

Content Structure and Organisation

All detection content follows a standardised directory structure under the content/ folder, with each artifact type in its own subdirectory:

    content/
    ├── scheduled-rules/      # Scheduled analytics rules (KQL-based detections)
    ├── nrt-rules/            # Near real-time (NRT) rules (run about once a minute)
    ├── microsoft-rules/      # Microsoft security product integration rules
    ├── automation-rules/     # Incident response automation workflows
    ├── hunting-queries/      # Proactive threat hunting queries
    ├── playbooks/            # Incident response playbooks and scripts
    ├── watchlists/           # Threat intelligence and reference data
    └── threat-intel-rules/   # STIX/TAXII threat intelligence rules

YAML-First Detection Authoring

Detection rules are authored in human-readable YAML format with strong schema validation. This approach reduces cognitive load and enables security engineers to focus on detection logic rather than infrastructure syntax.
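As a rough picture of what schema validation on a rule definition can look like, here is a minimal Python sketch that works on an already-parsed rule (a plain dictionary). The required-field list, the supported duration subset, and the rule that the lookback period must not be shorter than the run frequency are assumptions for this example, not the framework's actual schema.

```python
import re
from datetime import timedelta

SEVERITIES = {"High", "Medium", "Low", "Informational"}
REQUIRED = ("display_name", "severity", "query", "query_frequency", "query_period")
# Small subset of ISO 8601 durations: days, hours, minutes (PT5M, PT1H, P1D).
DURATION = re.compile(r"^P(?:(\d+)D)?(?:T(?:(\d+)H)?(?:(\d+)M)?)?$")


def parse_duration(value: str) -> timedelta:
    """Parse durations such as PT5M, PT1H or P1D into a timedelta."""
    match = DURATION.match(value)
    if not match or not any(match.groups()):
        raise ValueError(f"unsupported duration: {value!r}")
    days, hours, minutes = (int(group or 0) for group in match.groups())
    return timedelta(days=days, hours=hours, minutes=minutes)


def validate_rule(rule: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    errors = [f"missing field: {field}" for field in REQUIRED if field not in rule]
    if "severity" in rule and rule["severity"] not in SEVERITIES:
        errors.append(f"invalid severity: {rule['severity']!r}")
    durations = {}
    for field in ("query_frequency", "query_period"):
        if field in rule:
            try:
                durations[field] = parse_duration(rule[field])
            except (TypeError, ValueError):
                errors.append(f"invalid duration in {field}: {rule[field]!r}")
    if len(durations) == 2 and durations["query_frequency"] > durations["query_period"]:
        errors.append("query_period is shorter than query_frequency")
    return errors
```

Returning every problem at once, rather than stopping at the first, gives the author a complete list to fix before pushing again.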

Scheduled Analytics Rule Example:

    display_name: "Malware Detection from Defender"
    description: "Detects malware alerts from Microsoft Defender"
    severity: "High"
    enabled: true
    query: |
      SecurityAlert
      | where TimeGenerated > ago(1h)
      | where AlertName contains "malware" or AlertName contains "virus"
      | where AlertSeverity in ("High", "Medium")
      | project TimeGenerated, AlertName, AlertSeverity, CompromisedEntity, Description
    query_frequency: "PT1H"
    query_period: "PT1H"
    trigger_operator: "GreaterThan"
    trigger_threshold: 0
    suppression_enabled: false
    suppression_duration: "PT1H"
    tactics:
      - "Execution"
      - "DefenseEvasion"
    techniques:
      - "T1059"
      - "T1027"
    entity_mappings:
      - entity_type: "Host"
        field_mappings:
          - identifier: "HostName"
            column_name: "CompromisedEntity"
    incident_configuration:
      create_incident: true
      grouping_enabled: false
    custom_details: {}
    event_grouping:
      aggregation_method: "SingleAlert"

Automation Rule Workflows

Automation rules enable consistent incident response patterns through declarative configuration:

    display_name: "High Severity Auto Assignment"
    description: "Automatically assign high severity incidents to the security team"
    enabled: true
    order: 100
    conditions:
      - property: "IncidentSeverity"
        operator: "Equals"
        values: ["High"]
    actions:
      - order: 1
        action_type: "ModifyProperties"
        assignee: "security-team@company.com"
        status: "Active"
        labels: ["auto-assigned", "high-priority"]
    tags:
      Team: "Security"
      Purpose: "Auto-Assignment"
      Environment: "dev"

Rule Templates

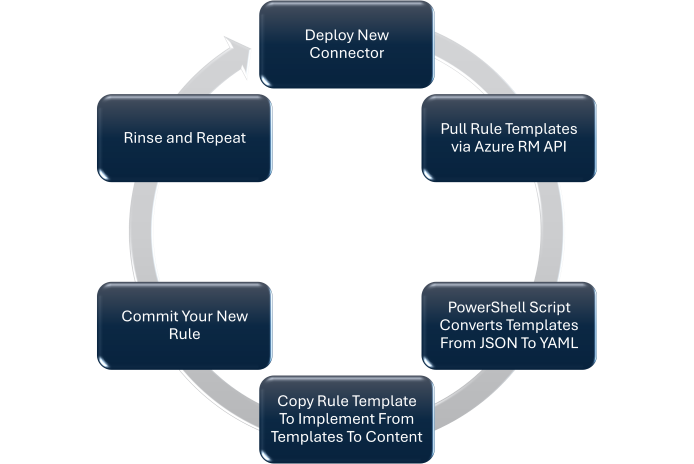

I did wonder whether this was overengineering simple click ops: once you enable a new data connector in the portal, its shiny new rule templates appear, and just clicking enable seems like the easier option. With that in mind, while establishing some baseline workflows I developed a PowerShell script that queries the Azure API to pull down the templates and parse them into YAML. These YAML templates appear in the templates\YAML directory for you to work from.

    PS D:\Projects\SentinelxTerraform-DaC> tree .\templates\
    D:\PROJECTS\SENTINELXTERRAFORM-DAC\TEMPLATES
    ├───ARM
    │   ├───FusionRules
    │   ├───MicrosoftRules
    │   ├───MLBehaviorAnalyticsRules
    │   ├───NRTRules
    │   ├───Resources
    │   ├───ScheduledRules
    │   └───ThreatIntelligenceRules
    └───YAML
        ├───Fusion
        ├───MicrosoftSecurityIncidentCreation
        ├───MLBehaviorAnalytics
        ├───NRT
        ├───Scheduled
        └───ThreatIntelligence

A simple diagram showing the circular workflow for onboarding new data connectors and converting their templates into manageable detection rules:

This circular workflow ensures that new data sources are quickly converted into actionable detection rules while maintaining code quality and operational standards.
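The template conversion step can be pictured with a short sketch. The script in the repository is PowerShell; the Python below is only an illustration of the mapping, assuming a template object with a `properties` block that uses the camelCase names from the alert rule template API (`displayName`, `queryFrequency`, and so on). The list of fields to keep and the choice to start converted rules as disabled are assumptions for this example.

```python
import re

# Template properties carried over into the YAML-style rule definition.
KEEP = (
    "displayName", "description", "severity", "query", "queryFrequency",
    "queryPeriod", "triggerOperator", "triggerThreshold", "tactics", "techniques",
)


def to_snake_case(name: str) -> str:
    """displayName -> display_name, queryFrequency -> query_frequency."""
    return re.sub(r"(?<=[a-z0-9])([A-Z])", r"_\1", name).lower()


def template_to_rule(template: dict) -> dict:
    """Map one alert rule template onto the snake_case rule layout."""
    props = template.get("properties", {})
    rule = {to_snake_case(key): props[key] for key in KEEP if key in props}
    # Assumption: converted templates stay disabled until someone reviews them.
    rule["enabled"] = False
    return rule
```

The resulting dictionary can then be written out with whichever YAML library you prefer and committed to the repository like any hand-written rule.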

Git-Based Collaboration Workflow

The framework enforces a structured Git workflow providing all the benefits of the SDLC to the detection engineering workflow:

Development Process:

- Feature Branch Creation: git checkout -b feature/project-x-use-case-development

- Content Authoring: Create or modify YAML files in the appropriate content/ subdirectories

- Local Validation: Run content validation scripts before committing

- Pull Request: Submit changes through GitHub/Azure DevOps pull request

- Automated Validation: CI pipeline validates YAML schema, KQL syntax, and Terraform plans

- Peer Review: Team members review detection logic, metadata, and deployment impact

- Terraform Plan: Automated plan generation shows exact infrastructure changes

- Approval Gate: Senior team members approve before merge to main branch

- Automated Deployment: Merge triggers deployment pipeline with proper environment promotion
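One way to keep the automated validation step fast is to validate only what a pull request touched. The sketch below groups changed file paths by artifact directory, using the content/ layout described earlier. The function name is hypothetical, and the list of paths is assumed to come from something like `git diff --name-only origin/main...HEAD`.

```python
from pathlib import PurePosixPath

# Artifact directories expected directly under content/.
ARTIFACT_DIRS = {
    "scheduled-rules", "nrt-rules", "microsoft-rules", "automation-rules",
    "hunting-queries", "playbooks", "watchlists", "threat-intel-rules",
}


def affected_artifacts(changed_paths) -> dict:
    """Group changed files under content/ by artifact directory.

    Paths outside content/ or in unknown subdirectories are ignored.
    """
    groups = {}
    for raw in changed_paths:
        parts = PurePosixPath(raw).parts
        if len(parts) >= 3 and parts[0] == "content" and parts[1] in ARTIFACT_DIRS:
            groups.setdefault(parts[1], []).append(raw)
    return groups
```

A CI job can then run the KQL checks only when scheduled-rules or nrt-rules appear in the result, and skip them entirely for a watchlist-only change.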

Environment Promotion Strategy

Changes can flow through environments with increasing validation and approval requirements if you choose. To avoid overengineering, a single main branch works fine: create a branch for each project that requires new detections, then raise a merge request back to main. You can also utilise multiple environments:

Development Environment:

- Immediate deployment on merge to the main branch

- Relaxed validation for rapid iteration

- Synthetic test data injection for rule validation

Testing Environment:

- Scheduled deployment (daily) from the main branch

- Full validation suite including performance testing

- Production-like data volumes for realistic testing

Production Environment:

- Manual approval required for deployment

- Change advisory board review for high-impact modifications

- Rollback procedures tested and documented

Metadata-Driven Documentation

Every detection rule includes structured metadata that automatically generates comprehensive documentation:

    # Standard metadata fields
    display_name: "Human-readable rule name"
    description: "Detailed explanation of detection logic and purpose"
    author: "detection-engineer@company.com"
    date_created: "2025-01-15"
    last_updated: "2025-01-20"
    severity: "High|Medium|Low|Informational"
    confidence: "High|Medium|Low"
    tags:
      data_source: ["SecurityEvent", "Syslog"]
      attack_technique: ["T1078", "T1110"]
      detection_method: ["Threshold", "Statistical"]
    false_positives: "Known scenarios that may trigger false alerts"
    response_plan: "Recommended analyst response and escalation procedures"
    references:
      - "https://attack.mitre.org/techniques/T1078/"
      - "https://github.kaiber/sentinel-detection-rules"

Automated Documentation Generation

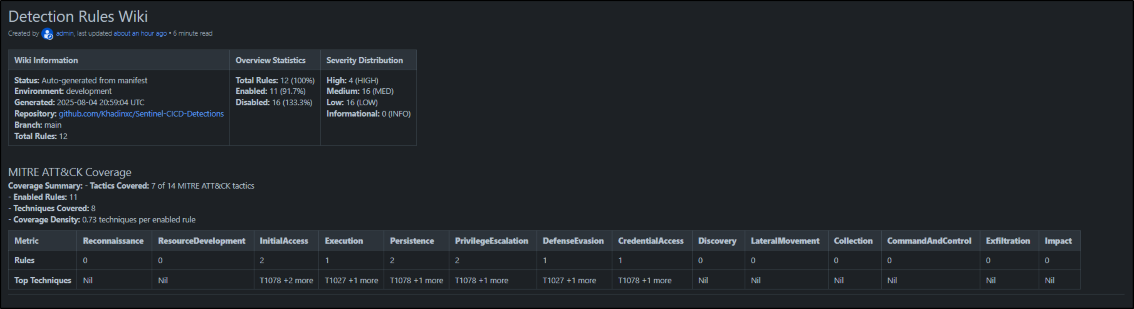

A key feature I wanted to develop as part of this project was a comprehensive Detection Wiki system (inspired by the .conf23 talk, where they use GitBook in their technology stack) that automatically generates documentation from your detection rules. This way, cyber folk in your organisation can spend more time preparing for and responding to threats rather than writing documentation, without sacrificing traceability:

A comprehensive system that generates Confluence-compatible documentation from Microsoft Sentinel detection rules.

🎨 Wiki Sample Screenshots

Professional dashboard with statistics, MITRE ATT&CK coverage analysis, and rule distribution


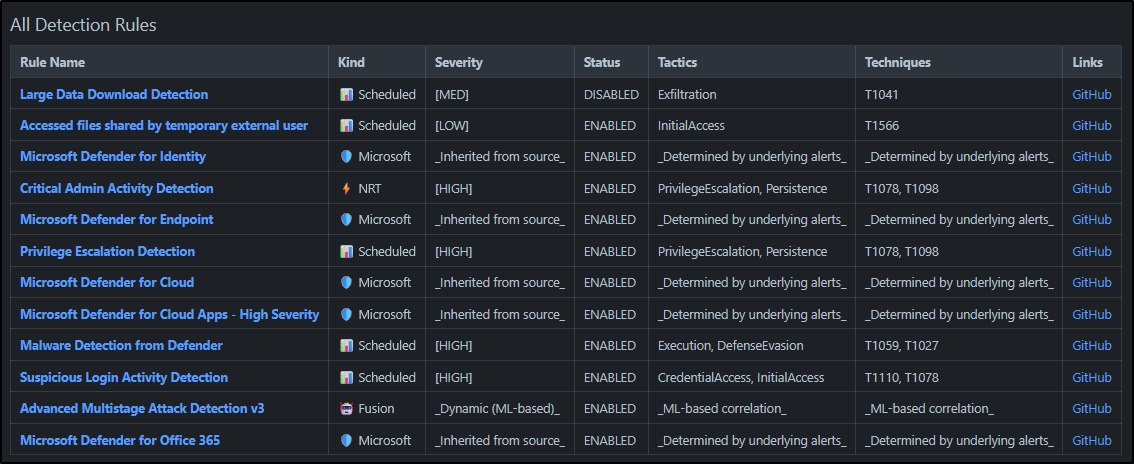

Complete rule inventory with severity, status, tactics, techniques, and GitHub links


Comprehensive rule details with MITRE mappings, source links, and technical specifications
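To show how a rule inventory page can fall out of the metadata, here is a minimal sketch that renders a table from a list of parsed rules. It is not the wiki generator from the repository. Markdown output, the column choice, and the function name are assumptions for this example; a Confluence target would need its own markup.

```python
def render_inventory(rules: list) -> str:
    """Render a Markdown inventory table, highest severity first."""
    order = {"High": 0, "Medium": 1, "Low": 2, "Informational": 3}
    rows = sorted(
        rules,
        key=lambda rule: (order.get(rule.get("severity"), 99), rule.get("display_name", "")),
    )
    lines = ["| Rule | Severity | Status | Techniques |", "| --- | --- | --- | --- |"]
    for rule in rows:
        status = "Enabled" if rule.get("enabled", False) else "Disabled"
        techniques = ", ".join(rule.get("techniques", [])) or "n/a"
        # Escape pipes so a rule name cannot break the table layout.
        name = str(rule.get("display_name", "unnamed")).replace("|", "\\|")
        lines.append(f"| {name} | {rule.get('severity', 'n/a')} | {status} | {techniques} |")
    return "\n".join(lines)
```

Because the page is regenerated from the same files that are deployed, the inventory cannot drift from what is actually running.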

Detection Rule Lifecycle Management

The framework supports full lifecycle management of detection rules:

Creation Phase:

- Template-based rule scaffolding with required metadata

- Peer review for detection logic and business justification

- Testing against historical data before production deployment

Active Monitoring Phase:

- Automated performance metrics collection (execution time, hit rate)

- False positive rate tracking and trending

- Regular effectiveness review cycles

Maintenance Phase:

- Scheduled metadata review and updates

- Performance optimisation based on telemetry

- Deprecation planning for outdated detection logic

Retirement Phase:

- Graceful disabling with impact assessment

- Historical data preservation for compliance

- Knowledge transfer and lessons learned documentation

Quality Assurance Practices

Pre-commit Validation:

- YAML schema validation ensures structural correctness

- KQL syntax validation catches query errors early

- Metadata completeness checks prevent incomplete submissions
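A metadata completeness check is the easiest of these to picture. The sketch below assumes the metadata fields shown earlier (author, date_created, false_positives, response_plan, references) are all mandatory and that technique IDs follow the ATT&CK pattern of T plus four digits with an optional three-digit sub-technique. Both are assumptions for this example rather than the framework's actual rules.

```python
import re

REQUIRED_METADATA = ("author", "date_created", "false_positives", "response_plan", "references")
# T1059 or T1059.001 (technique with optional sub-technique).
TECHNIQUE_ID = re.compile(r"^T\d{4}(\.\d{3})?$")


def metadata_problems(rule: dict) -> list:
    """Return a list of metadata problems; an empty list means complete."""
    problems = []
    for field in REQUIRED_METADATA:
        value = rule.get(field)
        if value is None or value == "" or value == []:
            problems.append(f"{field} is missing or empty")
    for technique in rule.get("techniques", []):
        if not TECHNIQUE_ID.match(str(technique)):
            problems.append(f"{technique!r} is not a valid ATT&CK technique ID")
    return problems
```

Wired into a pre-commit hook, a non-empty result would block the commit and print the list, so incomplete rules never reach a pull request.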

Automated Testing:

- Unit tests for individual KQL queries with known datasets

- Integration tests for multi-rule correlation scenarios

- Performance regression tests to prevent resource-intensive queries

Operational Monitoring:

- Detection rule health dashboards with key performance indicators

- Alert fatigue metrics and analyst feedback integration

- Cost optimisation reporting for expensive queries

This structured approach transforms detection engineering from an ad-hoc, individual activity into a collaborative, measurable engineering discipline that scales with organisational growth and complexity.

Problems Encountered

Building production-ready detection-as-code for Sentinel revealed several technical challenges and platform limitations that shaped the final architecture:

Provider Maturity and API Changes: Azure’s rapid evolution creates ongoing maintenance overhead. The AzureRM provider regularly deprecates arguments and changes resource schemas. Recent examples include automation rule condition blocks requiring migration to condition_json format, and analytics rule incident configuration restructuring. These changes require proactive monitoring and refactoring to maintain compatibility.

Identity, Access, and API Schema Strictness: Playbook integration requires careful consideration of managed identities, service principals, and cross-tenant scenarios. Logic Apps triggered by Sentinel rules need proper authentication context, and Azure Automation runbooks require secure credential management. These requirements add operational complexity and security considerations.

Azure’s REST APIs can be unforgiving about data structure and format requirements. The automation rules condition_json parameter, for example, requires specific nested object structures that don’t align with Terraform’s natural data modeling. Working around these constraints often requires custom JSON encoding and careful schema validation.
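As an illustration of the nesting involved, the sketch below turns the flat conditions from the YAML automation rule shown earlier into the kind of nested JSON the API expects. The key names (`conditionType`, `conditionProperties`, `propertyName`, `propertyValues`) reflect my understanding of the automation rules REST schema and should be checked against the current API reference before use; the helper names are hypothetical.

```python
import json


def build_condition(property_name: str, operator: str, values: list) -> dict:
    """Wrap one flat condition in the nested property-condition structure."""
    return {
        "conditionType": "Property",
        "conditionProperties": {
            "propertyName": property_name,
            "operator": operator,
            "propertyValues": list(values),
        },
    }


def to_condition_json(conditions: list) -> str:
    """Convert YAML-style conditions (property/operator/values) to a JSON string."""
    built = [
        build_condition(cond["property"], cond["operator"], cond["values"])
        for cond in conditions
    ]
    return json.dumps(built, sort_keys=True)
```

Keeping the flat shape in YAML and doing the wrapping in one place means authors never have to hand-write the nested structure, and a schema change only needs fixing once.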

Feature Coverage Gaps: Terraform provider coverage lags behind Azure platform features. Custom tables, advanced connector configurations, and newer Sentinel capabilities often require the AzAPI provider or external API calls. Some features like Jupyter notebook management remain outside Terraform scope entirely.

Platform Deprecations: Microsoft’s migration strategy for features affects long-term planning. Fusion rules deprecation during the Defender portal migration required proactive removal from the framework. Staying ahead of platform evolution requires monitoring roadmaps and planning migrations before forced obsolescence.

Scale and Performance Constraints: Large watchlist updates can trigger API throttling. Deploying hundreds of rules simultaneously may hit rate limits. The framework includes batching strategies and retry logic, but teams need awareness of these constraints when planning deployments.
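The batching and retry idea can be sketched in a few lines. This is not the framework's implementation: `ThrottledError` is a hypothetical stand-in for an HTTP 429 response, `deploy_batch` is whatever callable pushes a batch of rules, and the batch size and delays are arbitrary example values.

```python
import random
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for an HTTP 429 (too many requests) response."""


def batches(items, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def call_with_retry(func, *args, attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call func, retrying on throttling with exponential backoff and jitter."""
    for attempt in range(attempts):
        try:
            return func(*args)
        except ThrottledError:
            if attempt == attempts - 1:
                raise  # Out of attempts: surface the error to the pipeline.
            # Waits roughly 1s, 2s, 4s, ... plus jitter to avoid retry bursts.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))


def deploy_all(rules, deploy_batch, batch_size=20, sleep=time.sleep):
    """Deploy rules in batches and return how many were deployed."""
    deployed = 0
    for batch in batches(rules, batch_size):
        call_with_retry(deploy_batch, batch, sleep=sleep)
        deployed += len(batch)
    return deployed
```

Passing `sleep` in as a parameter keeps the backoff testable without actually waiting.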

Import and Migration Challenges: Converting existing portal-managed content to code requires significant data normalisation effort. Inconsistent naming, missing metadata, and undocumented dependencies make migration time-intensive. The framework provides utilities to assist, but manual curation remains necessary.

These challenges reinforced the importance of validation pipelines, incremental adoption strategies, and maintaining flexibility for platform evolution.

Architecture Benefits and Design Philosophy

This framework embodies several key principles that differentiate it from traditional SIEM management approaches:

Declarative Configuration: Rather than scripting imperative deployment steps, the framework declares desired end state and lets Terraform handle the execution path. This reduces deployment complexity and improves reliability across different environments and scenarios.

Immutable Deployments: Changes flow through version control and CI/CD rather than direct production modification. This prevents configuration drift and ensures all environments remain synchronised with the authoritative code repository.

Separation of Concerns: Detection logic, infrastructure configuration, and operational metadata exist in separate, focused modules. This enables different team members to contribute their expertise without stepping on each other’s work.

Progressive Enhancement: Teams can adopt components incrementally based on organisational readiness and technical constraints. Starting with basic rule management and adding automation, documentation, and advanced features over time provides a smooth adoption path.

Operational Visibility: Every aspect of the system generates telemetry and maintains audit trails. Understanding what changed, when, and why becomes straightforward rather than requiring forensic investigation.

Getting Started

If you would like to deploy something like this in your organisation, consider a phased approach to the detection-as-code journey; this builds confidence and demonstrates value incrementally:

Phase 1 - Foundation: Establish Terraform state management and convert a small subset of critical detection rules to code. Focus on rules that change frequently or require coordination across environments.

Phase 2 - Workflow: Implement Git workflows with pull request reviews and basic CI/CD validation. This establishes the collaboration patterns that drive long-term success.

Phase 3 - Documentation: Enable automatic Detection Wiki generation to demonstrate the value of structured metadata and living documentation that stays current with code changes.

Phase 4 - Automation: Add automation rules, playbooks, and operational workflows to show how the framework scales beyond basic detection management.

Phase 5 - Advanced Features: Incorporate threat intelligence automation, testing frameworks, and performance monitoring as the foundation matures.

This incremental approach allows teams to realise immediate value while building the organisational capability to support more sophisticated security engineering practices over time.

The detection-as-code paradigm represents a fundamental shift in how security teams approach SIEM operations. By treating security logic as software and applying proven engineering practices, organisations can achieve the reliability, scalability, and collaboration capabilities necessary for modern threat detection at enterprise scale.

Future State

The roadmap focuses on expanding the framework’s capabilities to support comprehensive security operations and advanced detection engineering workflows:

Adversary Emulation Integration: Deploy and manage a MITRE Caldera server or Atomic Red Team alongside Sentinel to enable complex use case development. Threat informed analytics stem from analysis of your threat landscape and identifying Threat Actors (TAs) that generally target your environment. Leveraging this information you can implement threat scenarios/attack campaigns in your adversary emulator to replicate these complex use cases. This ensures your rules fire when a real scenario happens by simulating it as close to the real thing as possible. Automated campaign execution with results correlation back to detection rule effectiveness provides quantitative measurement of security posture. Coverage maps highlighting gaps in ATT&CK technique detection guide detection engineering priorities.
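The coverage-map idea can already be approximated from rule metadata alone. The sketch below compares the techniques declared by enabled rules against a list of priority techniques, which is assumed to come from your own threat landscape analysis. The function name and output shape are assumptions, and declared coverage says nothing about whether a rule actually fires, which is what the emulation results would add.

```python
def coverage_gaps(rules: list, priority_techniques: list) -> dict:
    """Compare techniques covered by enabled rules with a priority list."""
    covered = set()
    for rule in rules:
        if rule.get("enabled", False):
            covered.update(rule.get("techniques", []))
    wanted = set(priority_techniques)
    hit = wanted & covered
    return {
        "covered": sorted(hit),
        "gaps": sorted(wanted - covered),
        # Share of priority techniques with at least one enabled rule.
        "coverage": round(len(hit) / len(wanted), 2) if wanted else 1.0,
    }
```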

Threat Intelligence at Scale: Native TAXII server integration so that cyber threat intelligence analysts can quickly triage indicators and share them with threat intelligence communities via an industry-recognised protocol (TAXII) in a standardised format (STIX). Spinning up infrastructure that complements security solutions through IaC minimises time spent on manual configuration and increases the time available for disseminating high-confidence Indicators of Compromise.

Detection Testing and Quality Assurance: Comprehensive KQL unit testing framework with sample telemetry injection and baseline performance measurement. Golden query regression testing catches logic changes that alter detection behavior unexpectedly. Performance thresholds prevent resource-intensive rules from impacting platform stability.

Future Proof Your Security Operations

The detection-as-code approach represents more than just a technical upgrade; it’s a shift toward treating security operations with the same engineering rigor as software development. If you’re tired of manual portal management, inconsistent deployments, and tribal knowledge, this framework provides the foundation for scalable, maintainable security operations.

Get Started Today

Ready to implement detection-as-code in your environment? Here’s how to begin:

- Explore the Framework: Visit the GitHub repository to examine the complete codebase, documentation, and example configurations.

- Start Small: Begin with a single detection rule migration to prove the concept and build confidence within your team.

- Join the Discussion: Have questions or want to share your implementation experience? Connect with me on GitHub or reach out via email.

- Contribute Back: Found improvements or additional use cases? Pull requests and community contributions help make the framework even better for everyone.

The future of security operations is code-driven, collaborative, and scalable. Take the first step from click ops hell to engineering excellence with streamlined deployments to your Sentinel environment.

Keen to dive in? Clone the repo and begin your detection-as-code journey today.